GitHub - jina-ai/executor-clip-encoder: Encoder that embeds documents using either the CLIP vision encoder or the CLIP text encoder, depending on the content type of the document.

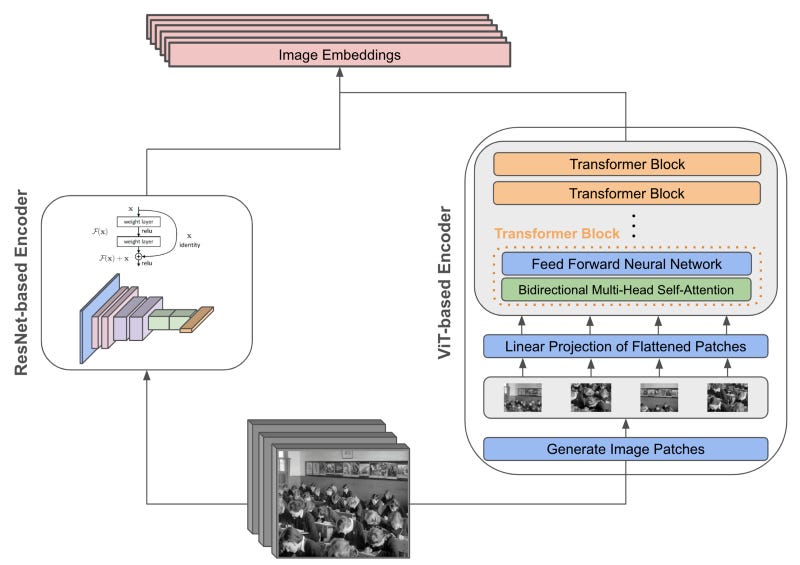

Sensors | Free Full-Text | Sleep CLIP: A Multimodal Sleep Staging Model Based on Sleep Signals and Sleep Staging Labels

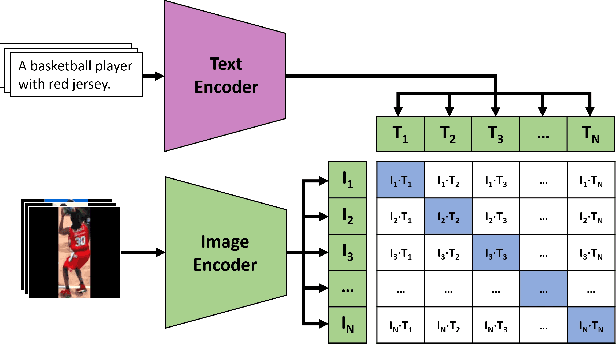

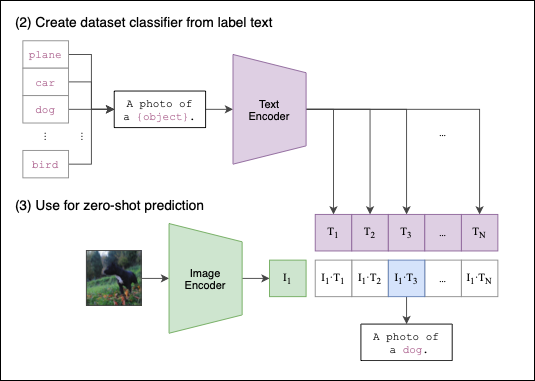

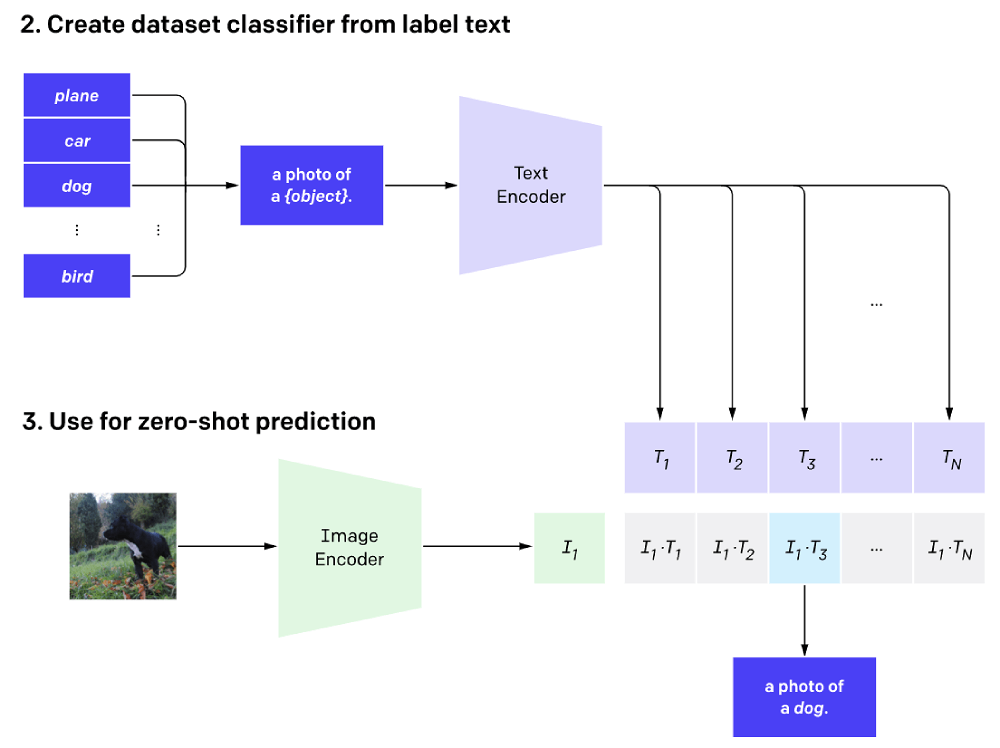

Example showing how the CLIP text encoder and image encoders are used... | Download Scientific Diagram

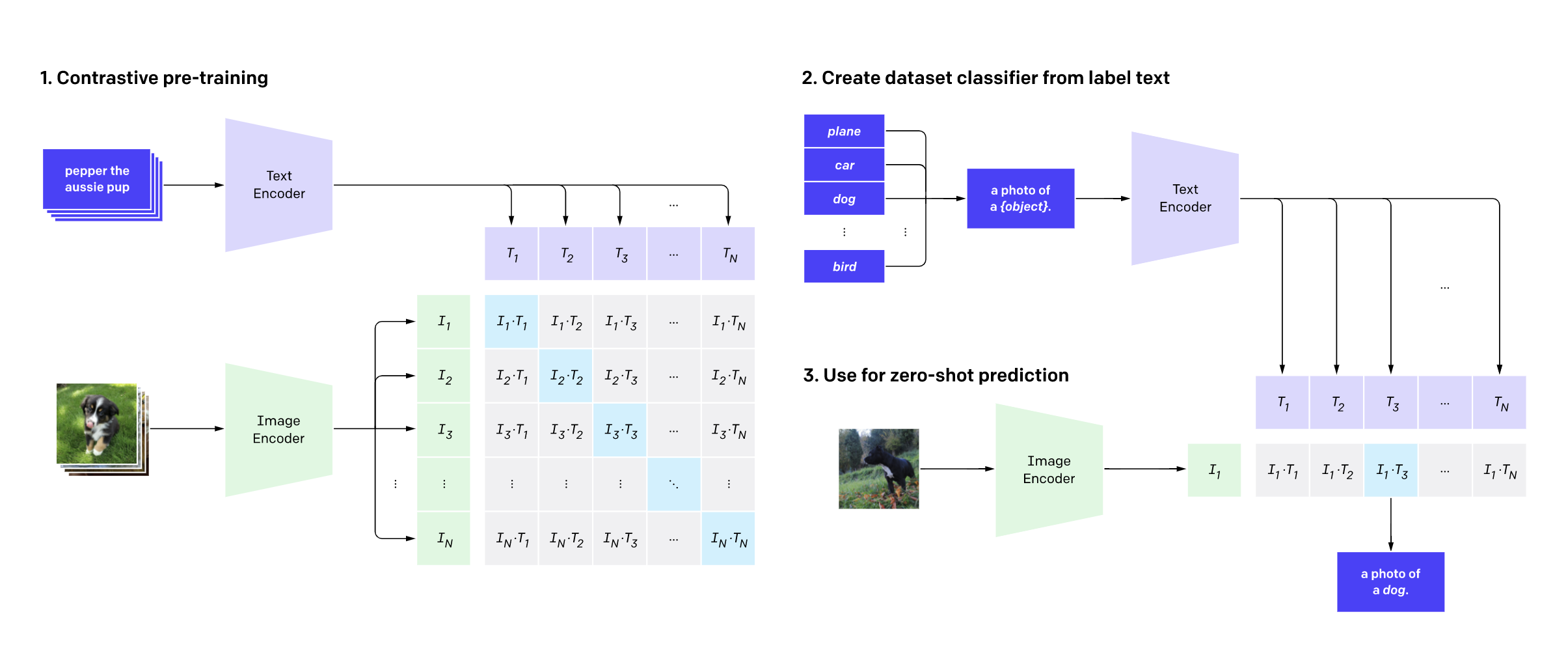

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

HYCYYFC Mini Motor Double-Diaphragm Coupling, Lightweight Coupling with 2.13-Inch Clip Mount for Encoder : Amazon.it: Business, Industry & Science

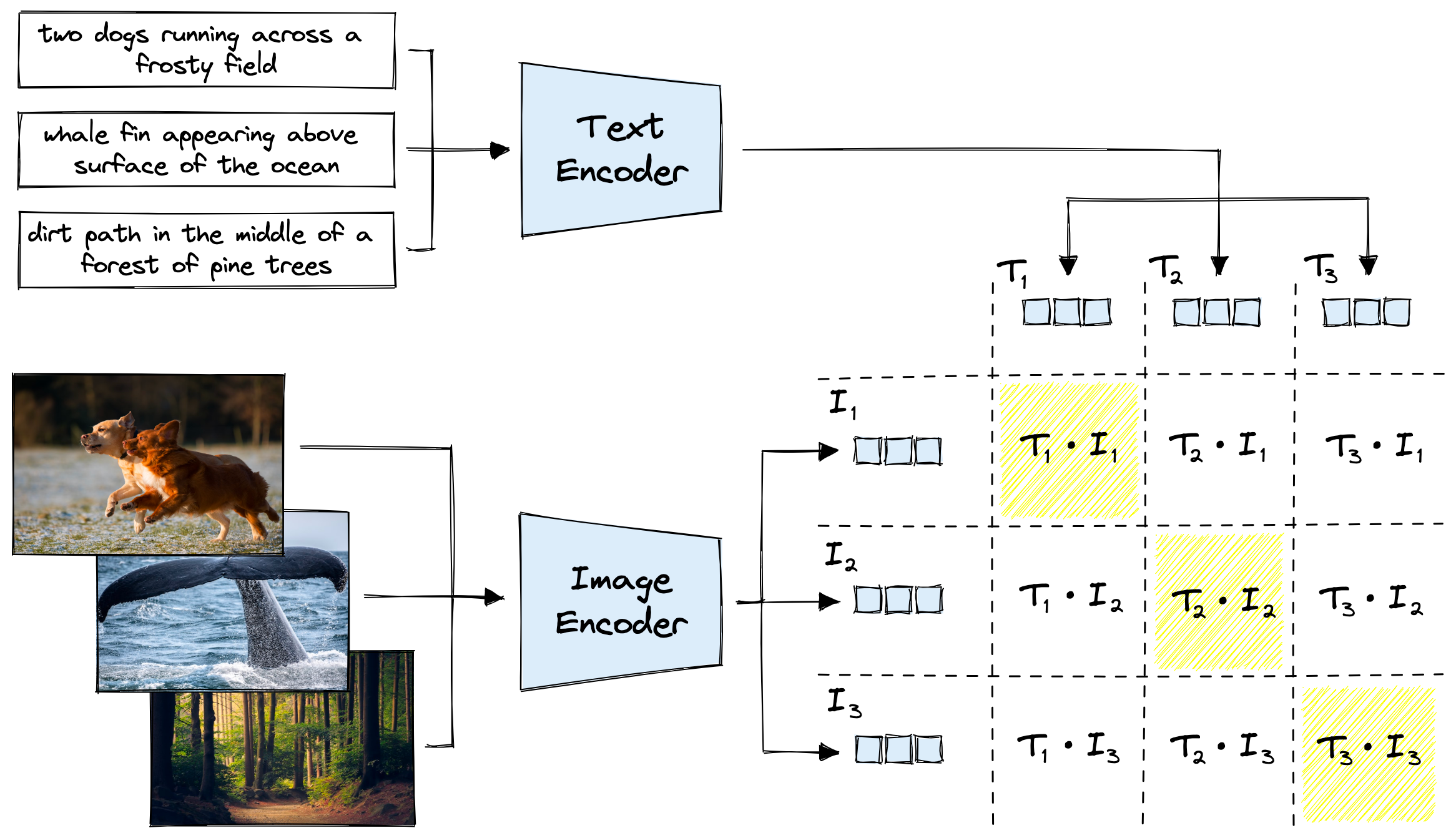

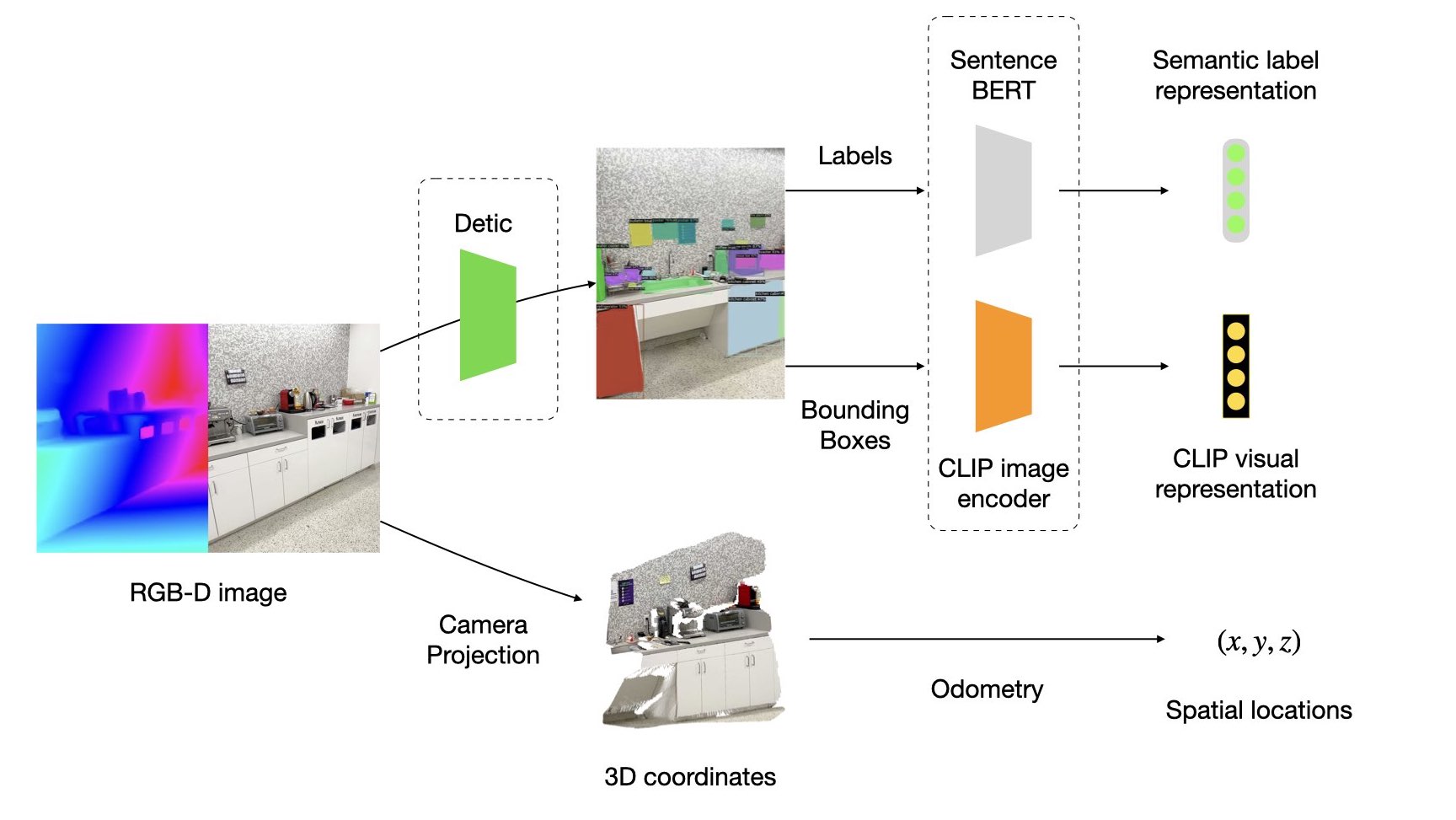

Process diagram of the CLIP model for our task. This figure is created... | Download Scientific Diagram

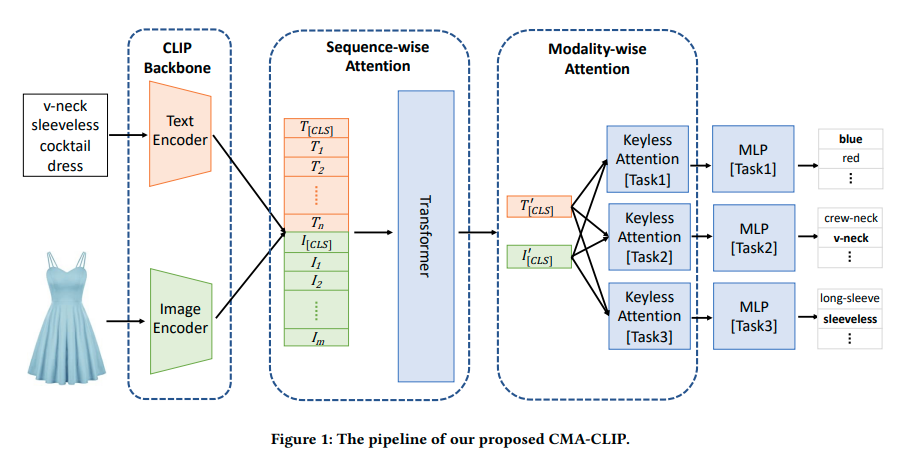

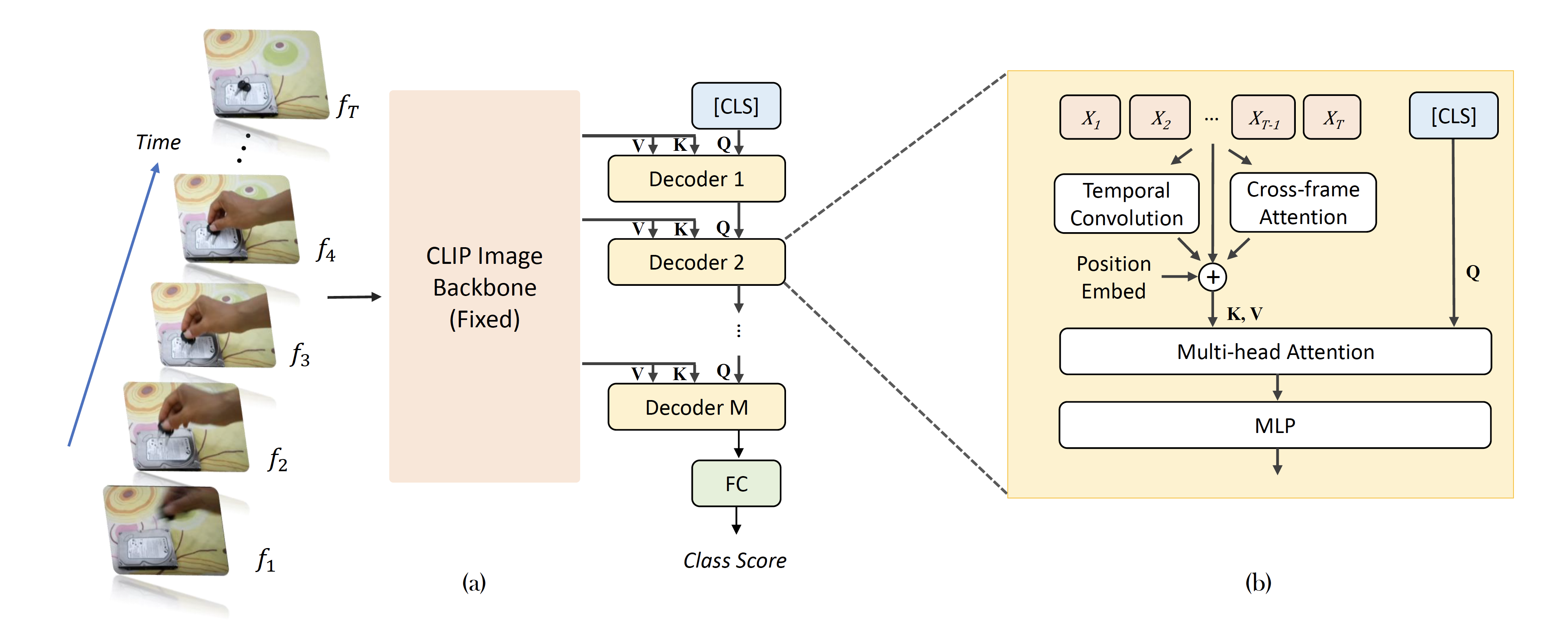

Proposed approach of CLIP with Multi-headed attention/Transformer Encoder. | Download Scientific Diagram

How do I decide on a text template for CoOp:CLIP? | AI-SCHOLAR | AI: (Artificial Intelligence) Articles and technical information media

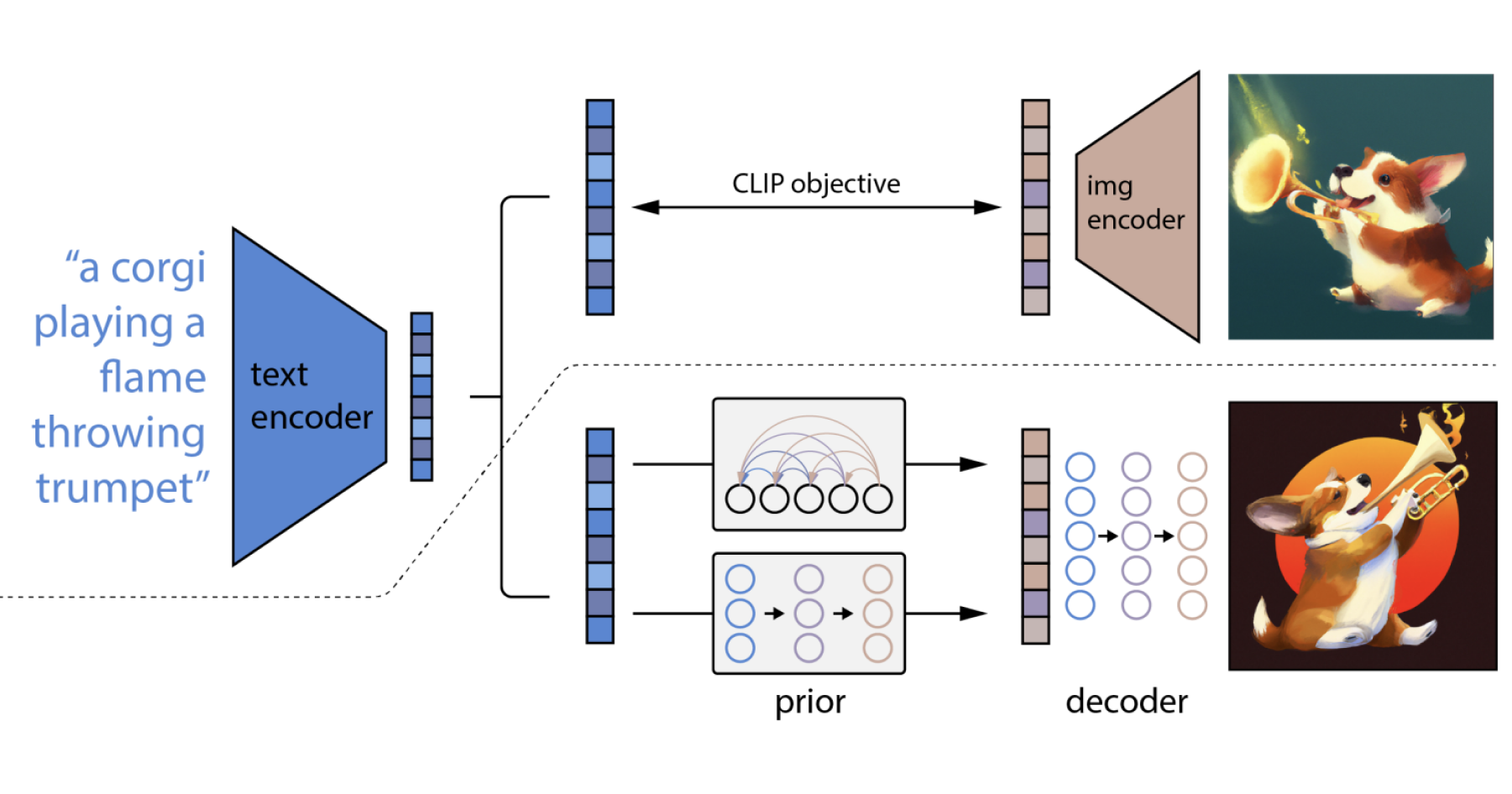

From DALL·E to Stable Diffusion: How Do Text-to-Image Generation Models Work? - Edge AI and Vision Alliance

Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost

![[P] [R] Pre-trained Multilingual-CLIP Encoders : r/MachineLearning](https://preview.redd.it/n4vkz1ceofo61.png?width=1739&format=png&auto=webp&s=bf33b2791d15b1af6e40d5fd5e5c3742d2b62ad2)